Go scheduler details

Table of Contents

Scheduler was implemented by Dmitry Vyukov in go 1.1 and lives in runtime/proc.go.

Good resources

https://www.youtube.com/watch?v=-K11rY57K7k — video by Dmitry Vyukov (slides as pdf)

https://www.ardanlabs.com/blog/2018/08/scheduling-in-go-part2.html — nice article by Bill Kennedy in 3 parts. Most of the images below are taken from this article.

Main ideas

Goroutines are very light weight (~2KB+) and very cheap to operate. You can have millions of them in your program. Context switch between goroutines is also pretty cheap.

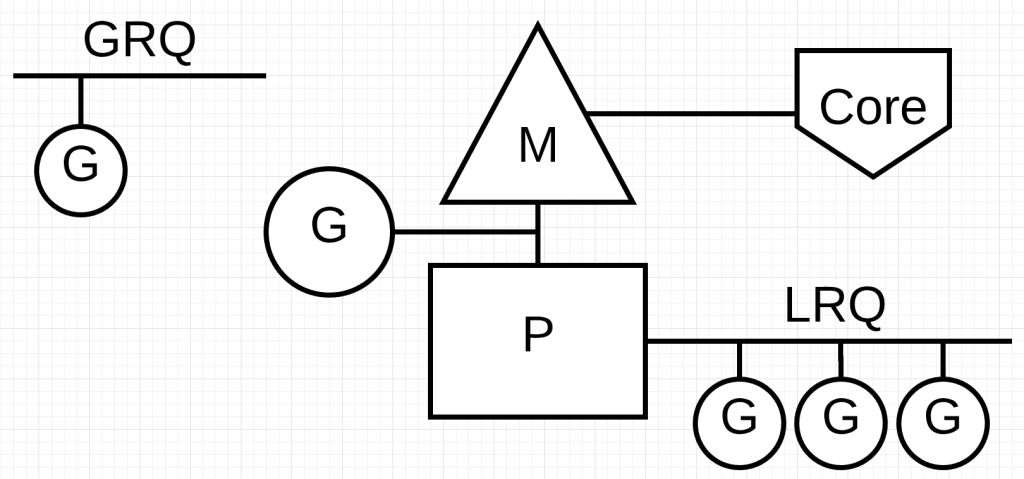

There are 5 main entities Scheduler operates:

- [P], Processors (equal to number of physical processing units like processor cores)

- /M\, Machines (OS threads)

- (G), Goroutines (goroutines).

- GRQ, Global Run Queue keeps runnable goroutines

- LRQ, Local Run Queue per Processor keeps runnable goroutines per Processor to keep data locality.

Goroutine states

are the same as OS thread states:

Waiting, Runnable, Executing.

Scheduler in go is cooperative

which means it is only allowed to interrupt code execution in defined safe points (it has an exception to that rule though). OS thread is preemptive, and it is unpredictable from the code, when the execution will be paused.

At the same time, there is no control from the program on scheduler’s behaviour (except for Gosched and LockOSThread).

Cooperative context switching

could happen when one of 4 events happening:

gokeyword is excuted- Garbage Collector is executing

- System Call happens

- Synchronization/orchestration happens — atomic, mutex, channel operation is executed

Preemptive context switching

To prevent functions from being executed for too long without giving a chance for other runnable goroutines to execute, a function prologue is placed at the beginning of every function and checks the execution time slice for goroutine ( this is a time limit of ~10ms). If time is out, it tricks the stack size which forces the function to stop and switch to runtime. Runtime fixes the stack size back and places the goroutine to LRQ.

Handling syscalls

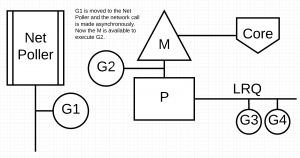

If a syscall is asynchronous, goroutine is moved to the NetPoller* with a state Waiting, and then returned back to the LRQ.

*NetPoller is an abstraction on group of syscalls (epoll 0n Linux, epoll_create, epoll_ctl, epoll_wait; kqueue on MacOS/BSD)

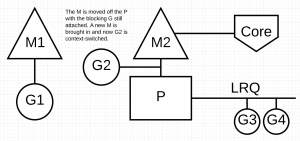

If a syscall is synchronous/blocking, it should be kept linked to the OS Thread. In this case, both M and G are parked, and P is getting a new M from the pool to execute another goroutine from LRQ.

More details on how go works with syscalls you can find here and here.

Work stealing

If LRQ is empty, Processor is stealing from a different LRQ, GRQ, Net Poller.

Scheduler logic in details

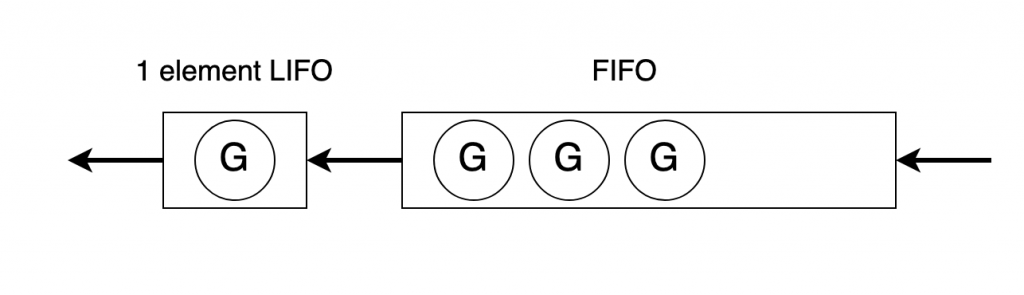

LRQ

Local Run Queue is FIFO, so every goroutine waits a comparable amount of time to be executed. But this means data locality is broken as every goroutine has its own stack.

To not break this CPU-level optimization, they use a 1 element LIFO queue to switch between 2 last executed goroutines.

Also, this LIFO-goroutine cannot be stolen by another P. Limited by ~10ms as well.

Finding a runnable goroutine

They want to achieve fairness for all goroutines while keeping the overhead as low as possible.

Goroutine preemption: if goroutine is running for too long, preemption is used (goroutine moved to GRQ) (~10ms, v).

Even load for Ps: work stealing from different Processor’s LRQ.

FIFO and LIFO for Local Run Queues: for switching 2 last goroutines; it can happen up to «time slice» period for preemption (~10ms); goroutine cannot be stolen by another processor for ~3microseconds.

GRQ unblocking: every 61st schedTick, Processor is forced to pull from Global Run Queue (v).

Network Poller starvation is prevented by checking it in a separate thread as it involves a sys call (v).

Logic

- every 61st tick, pull from GRQ (no matter what)

- check LRQ

- try to steal from another P’s LRQ

- check GRQ

- check NetPoller

Resources

- https://www.youtube.com/watch?v=-K11rY57K7k — video by Dmitry Vyukov (slides as pdf)

- https://www.ardanlabs.com/blog/2018/08/scheduling-in-go-part2.html — nice article by Bill Kennedy in 3 parts.

- https://www.youtube.com/watch?v=rloqQY9CT8I — simplified (RU)

- https://go.dev/src/runtime/HACKING — detailed article

- https://meska54.hashnode.dev/concurrency-in-go-a-deeper-look-into-gos-runtime-scheduler

- https://habr.com/ru/articles/743266/ (RU)

- https://utcc.utoronto.ca/~cks/space/blog/programming/GoSchedulerAndSyscalls — details on how go works with syscalls

- https://morsmachine.dk/netpoller — another article on how go works with syscalls

Similar Posts

LEAVE A COMMENT

Для отправки комментария вам необходимо авторизоваться.