What Is a Service Mesh? Introductory article from NGINX

The original is here — https://www.nginx.com/blog/what-is-a-service-mesh/

You can find another short introductory article on Istio here.

A service mesh is a configurable infrastructure layer for a microservices application. It makes communication between service instances flexible, reliable, and fast. The mesh provides service discovery, load balancing, encryption, authentication and authorization, support for the circuit breaker pattern, and other capabilities.

The service mesh is usually implemented by providing a proxy instance, called a sidecar, for each service instance. Sidecars handle inter-service communications, monitoring, security-related concerns – anything that can be abstracted away from the individual services. This way, developers can handle development, support, and maintenance for the application code in the services; operations can maintain the service mesh and run the app.
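To make the division of labor concrete, here is a minimal sketch of a sidecar wrapping one service instance. It is not how any particular mesh is implemented: the `SidecarProxy` class, the `orders_service` function, and the `x-caller` header are all hypothetical names chosen for illustration. The point is only that the application function contains no monitoring or identity logic, while the proxy in front of it does.

```python
import time
from typing import Callable, Dict, List

# Hypothetical handler shape: (headers, body) -> response text.
Handler = Callable[[Dict[str, str], str], str]


def orders_service(headers: Dict[str, str], body: str) -> str:
    """Plain application code: no metrics or identity checks in here."""
    return f"order accepted: {body}"


class SidecarProxy:
    """Toy sidecar dedicated to a single service instance."""

    def __init__(self, service_name: str, app: Handler) -> None:
        self.service_name = service_name
        self.app = app
        self.latencies: List[float] = []  # monitoring data, in seconds
        self.rejected = 0                 # requests refused by the proxy

    def inbound(self, headers: Dict[str, str], body: str) -> str:
        # Security-related concern: require a caller identity.
        # The "x-caller" header name is an assumption for this sketch.
        if "x-caller" not in headers:
            self.rejected += 1
            return "401 missing caller identity"
        # Monitoring concern: time every call that reaches the service.
        start = time.perf_counter()
        try:
            return self.app(headers, body)
        finally:
            self.latencies.append(time.perf_counter() - start)


proxy = SidecarProxy("orders", orders_service)
print(proxy.inbound({"x-caller": "web"}, "2 books"))
print(proxy.inbound({}, "1 pen"))
```

Swapping the monitoring or identity logic means changing only the proxy, which is the kind of separation between development and operations that the paragraph above describes.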

Istio, backed by Google, IBM, and Lyft, is currently the best‑known service mesh architecture. Kubernetes, which was originally designed by Google, is currently the only container orchestration framework supported by Istio.

Service mesh comes with its own terminology for component services and functions:

- Container orchestration framework. As more and more containers are added to an application’s infrastructure, a separate tool for monitoring and managing the set of containers – a container orchestration framework – becomes essential. Kubernetes seems to have cornered this market, with even its main competitors, Docker Swarm and Mesosphere DC/OS, offering integration with Kubernetes as an alternative.

- Services vs. service instances. To be precise, what developers create is not a service, but a service definition or template for service instances. The app creates service instances from these, and the instances do the actual work. However, the term “services” is often used for both the instance definitions and the instances themselves.

- Sidecar proxy. A sidecar proxy is a proxy instance that’s dedicated to a specific service instance. It communicates with other sidecar proxies and is managed by the orchestration framework.

- Service discovery. When an instance needs to interact with a different service, it needs to find – discover – a healthy, available instance of the other service. The container orchestration framework keeps a list of instances that are ready to receive requests.

- Load balancing. In a service mesh, load balancing works from the bottom up. The list of available instances maintained by the service mesh is stack-ranked to put the less busy instances – that’s the load balancing part – at the top.

- Encryption. The service mesh can encrypt and decrypt requests and responses, removing that burden from each of the services. The service mesh may also improve performance by prioritizing the re-use of existing, persistent connections, reducing the need for the computationally expensive creation of new ones.

- Authentication and authorization. The service mesh can authorize and authenticate requests made from both outside and within the app, sending only validated requests to service instances.

- Support for the circuit breaker pattern. The service mesh can support the circuit breaker pattern, which isolates unhealthy instances, then gradually brings them back into the healthy instance pool if warranted.
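Three of the terms above (service discovery, load balancing, and the circuit breaker pattern) all operate on the same list of instances, so they can be sketched together. The following is a toy model under stated assumptions, not the algorithm of Istio or any other mesh: the `Registry` and `Instance` classes, the `max_failures` threshold, and the fixed `cooldown` are hypothetical. In particular, an isolated instance here simply rejoins the pool once the cooldown expires, which is cruder than the gradual re-admission described above.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Instance:
    """One service instance as tracked by the (hypothetical) registry."""
    address: str
    active_requests: int = 0    # current load, used for ranking
    failures: int = 0           # consecutive failed calls
    ejected_until: float = 0.0  # time until which the instance is isolated


@dataclass
class Registry:
    """Toy stand-in for the per-service instance list a mesh maintains."""
    max_failures: int = 3       # failures before an instance is isolated
    cooldown: float = 30.0      # seconds an isolated instance stays out
    services: Dict[str, List[Instance]] = field(default_factory=dict)

    def register(self, service: str, address: str) -> Instance:
        inst = Instance(address)
        self.services.setdefault(service, []).append(inst)
        return inst

    def discover(self, service: str, now: Optional[float] = None) -> List[Instance]:
        """Service discovery plus load balancing: return the instances that
        are not isolated, ranked with the least busy first."""
        now = time.monotonic() if now is None else now
        ready = [i for i in self.services.get(service, [])
                 if i.ejected_until <= now]
        return sorted(ready, key=lambda i: i.active_requests)

    def report(self, inst: Instance, ok: bool, now: Optional[float] = None) -> None:
        """Circuit breaker bookkeeping: isolate after repeated failures."""
        now = time.monotonic() if now is None else now
        if ok:
            inst.failures = 0
            return
        inst.failures += 1
        if inst.failures >= self.max_failures:
            inst.ejected_until = now + self.cooldown
            inst.failures = 0


registry = Registry(max_failures=2, cooldown=10.0)
a = registry.register("orders", "10.0.0.1:8080")
b = registry.register("orders", "10.0.0.2:8080")
a.active_requests, b.active_requests = 5, 1
print([i.address for i in registry.discover("orders", now=0.0)])
```

A caller would take the first entry of `discover(...)`, send the request, and then call `report(...)` with the outcome. The `now` parameter exists only so the behavior can be exercised deterministically.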

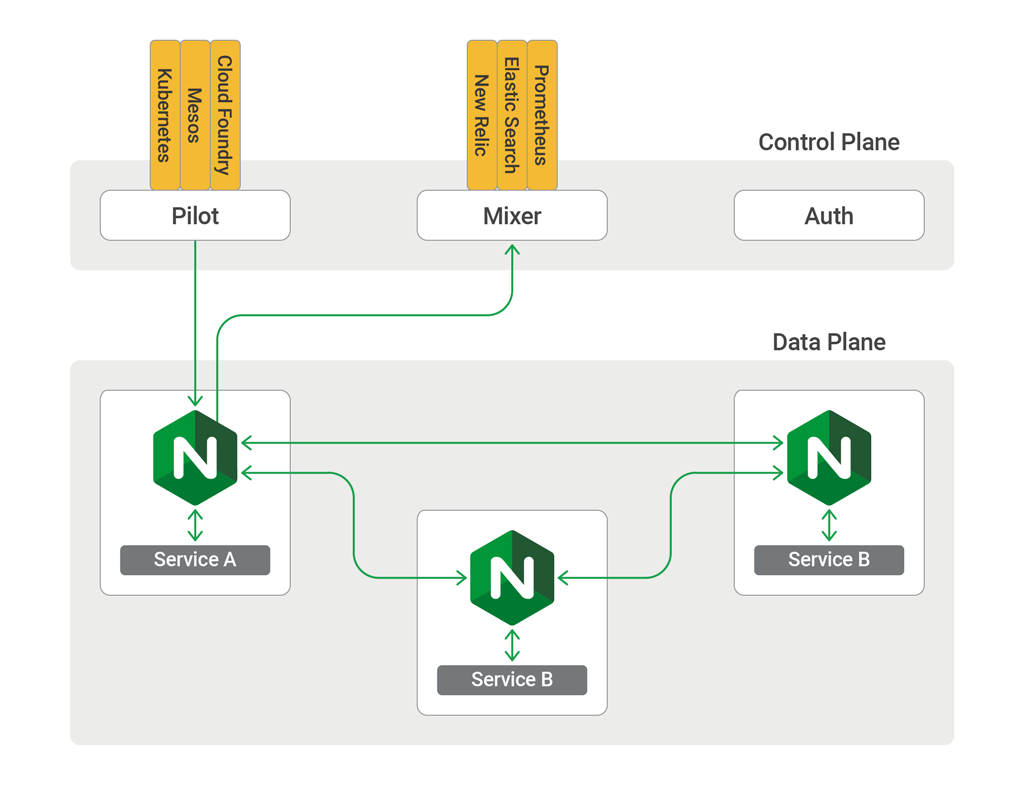

The part of the service mesh where the work is getting done – service instances, sidecar proxies, and the interaction between them – is called the data plane of a service mesh application. (Though it’s not included in the name, the data plane handles processing too.) But a service mesh application also includes a monitoring and management layer, called the control plane.

The control plane handles tasks such as creating new instances, terminating unhealthy or unneeded instances, monitoring, integrating monitoring and management, implementing application-wide policies, and gracefully shutting down the app as a whole. The control plane typically includes, or is designed to connect to, an application programming interface, a command-line interface, and a graphical user interface for managing the app.
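The split between the two planes can be illustrated with a small sketch in which a control plane distributes an application-wide policy and the sidecars enforce it on each request. Everything here is an assumption made for illustration: the `Policy`, `Sidecar`, and `ControlPlane` classes and the caller allow-list are hypothetical and do not mirror the API of Istio or nginMesh.

```python
from dataclasses import dataclass
from typing import Callable, FrozenSet, Iterable, List


@dataclass(frozen=True)
class Policy:
    """Application-wide policy; the fields are illustrative assumptions."""
    version: int
    allowed_callers: FrozenSet[str]


class Sidecar:
    """Data plane: handles the traffic of one service instance."""

    def __init__(self, service: str, handler: Callable[[str], str]) -> None:
        self.service = service
        self.handler = handler  # the application code behind the proxy
        self.policy = Policy(version=0, allowed_callers=frozenset())

    def apply(self, policy: Policy) -> None:
        self.policy = policy

    def handle(self, caller: str, request: str) -> str:
        # Enforcement happens locally, using the last policy received.
        if caller not in self.policy.allowed_callers:
            return "403 denied"
        return self.handler(request)


class ControlPlane:
    """Control plane: owns the policy and pushes it to every sidecar."""

    def __init__(self) -> None:
        self.sidecars: List[Sidecar] = []
        self.policy = Policy(version=0, allowed_callers=frozenset())

    def attach(self, sidecar: Sidecar) -> None:
        self.sidecars.append(sidecar)
        sidecar.apply(self.policy)

    def set_policy(self, allowed_callers: Iterable[str]) -> None:
        self.policy = Policy(self.policy.version + 1, frozenset(allowed_callers))
        for sidecar in self.sidecars:
            sidecar.apply(self.policy)


control = ControlPlane()
orders = Sidecar("orders", lambda req: f"orders handled {req}")
control.attach(orders)
print(orders.handle("web", "GET /1"))  # denied: default policy allows nobody
control.set_policy({"web"})
print(orders.handle("web", "GET /1"))  # allowed after the policy push
```

Note that the request path never calls into `ControlPlane`: the sidecar decides using the policy it already holds, so in this sketch the control plane is involved only when configuration changes.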

NGINX has an Istio‑compatible service mesh called nginMesh. This diagram of the nginMesh architecture shows NGINX in the sidecar proxy role, along with typical Istio components.

A common use case for service mesh architecture is when you are using containers and microservices to solve very demanding application development problems. Pioneers in microservices include companies like Lyft, Netflix, and Twitter, which each provide robust services to millions of users worldwide, hour in and hour out. (See our in-depth description of some of the architectural challenges facing Netflix.) For less demanding application needs, simpler architectures are likely to suffice.

Service mesh architectures are never likely to be the answer to all application development and delivery problems. Architects and developers have a great many tools, only one of which is a hammer, and must address a great many types of problems, only one of which is a nail. The NGINX Microservices Reference Architecture, for instance, includes several different models that give a continuum of approaches to using microservices to solve problems.

The elements that come together in a service mesh architecture – such as NGINX, containers, Kubernetes, and microservices as an architectural approach – can be, and are, used productively in non-service mesh implementations. For example, Istio, which was developed as a service mesh architecture, is designed to be modular, so developers can pick and choose what they need from it. With this in mind, it’s worth developing a solid understanding of service mesh concepts, even if you’re not sure if and when you’ll fully implement a service mesh application.
